Introduction

As financial technology evolves, Non-Banking Financial Companies (NBFCs) increasingly need efficient Software as a Service (SaaS) applications. EMI (Equated Monthly Installment) collection systems handle sensitive data and must scale with demand, so they require a robust, flexible, and resilient architecture. In this article, we’ll explore how to optimize an EMI collection SaaS using Kubernetes, Puppet, and a microservices architecture, addressing common challenges such as scalability, resilience, and security.

Understanding the Problem

A traditional monolithic architecture has several limitations that hurt the performance of EMI collection applications:

- Tight Coupling: All components are built and deployed as a single unit, so a fault in one component can bring down the whole application, and no component can be scaled on its own.

- Resource Constraints: Insufficient CPU, RAM, or other resources can lead to poor performance under high load.

- Inefficient Code: Poorly optimized code handling large datasets and concurrent requests can slow down the system.

- Concurrency Issues: The system cannot efficiently handle multiple Excel file uploads, and the processing they trigger, at the same time.

To address these issues, we propose breaking down the monolithic architecture into microservices, leveraging Kubernetes for container orchestration, Docker for containerization, and Puppet for configuration management.

Solution: Leveraging Kubernetes, Puppet, and Microservices

1. Adopting a Microservices Architecture

A microservices architecture decomposes the monolithic application into smaller, independent services, each responsible for a specific function. Here’s how microservices can optimize your EMI collection SaaS:

- Scalability: Each service can scale independently based on the load, enabling efficient use of resources.

- Resilience: A failure in one service can be contained instead of bringing down the whole application, provided callers handle it gracefully (for example, with timeouts and retries).

- Technology Agnostic: Each service can use the most suitable technology, allowing for more flexibility in development.

Core Microservices for EMI Collection SaaS:

- API Service: Handles API requests from the front-end or mobile apps.

- Excel Processing Service: Manages Excel file uploads and processing.

- Receipt Generation Service: Generates payment receipts post-collection.

- Notification Service: Sends notifications (SMS, email) to customers and agents.

- User Management Service: Handles authentication and user management.

2. Containerization with Docker

Docker is a containerization platform that packages each microservice into a standalone container, ensuring that each has all the necessary dependencies and configuration.

- Isolation: Each microservice runs in its own container with its own dependencies, so services do not conflict with each other and behave consistently across environments.

- Portability: Containers can be easily deployed on different platforms without compatibility issues.

- Efficiency: Containers start up quickly and consume fewer resources than traditional virtual machines.

Example Dockerfile for the Excel Processing Service:

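```dockerfile
FROM python:3.10

WORKDIR /app

# Copy and install dependencies first so this layer is cached between code changes
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

# Copy the application code
COPY . .

# Port the service listens on (matches containerPort in the Deployment below)
EXPOSE 8080

CMD ["python", "app.py"]
```

3. Kubernetes for Orchestration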

Kubernetes automates the deployment, scaling, and management of containerized applications, offering several key features for an optimized EMI collection SaaS:

- Automatic Deployment and Scaling: Kubernetes can deploy containers based on defined configurations and scale them up or down as needed.

- Load Balancing: Distributes network traffic across multiple service instances, ensuring high availability and performance.

- Self-Healing: Automatically replaces failed containers, maintaining application stability.

- Horizontal Scaling: Adds pod replicas to handle increased load, either manually or automatically with the Horizontal Pod Autoscaler.

Example Kubernetes Deployment YAML for Excel Processing Service:

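```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: excel-processing-service
spec:
  replicas: 3
  selector:
    matchLabels:
      app: excel-processing        # must match the pod template labels below
  template:
    metadata:
      labels:
        app: excel-processing
    spec:
      containers:
        - name: excel-processing
          # Prefer an immutable version tag over :latest so rollouts and rollbacks are predictable
          image: my-registry.example.com/excel-processing:latest
          ports:
            - containerPort: 8080
          resources:
            limits:                # hard ceiling; a container exceeding its memory limit is OOM-killed
              cpu: "2"
              memory: 2Gi
            requests:              # reserved capacity used for scheduling decisions
              cpu: "1"
              memory: 1Gi
```

4. Puppet for Configuration Management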

Puppet is a configuration management tool that automates the provisioning and management of infrastructure, ensuring a consistent environment across all deployments.

- Infrastructure as Code (IaC): Define the desired state of your infrastructure using code, which makes provisioning reproducible and reliable.

- Automation: Automate routine tasks like installing software, configuring services, and patch management.

- Consistency: Maintain uniformity across all environments, reducing the chances of configuration drift.

Example Puppet Manifest for Installing Docker:

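```puppet
# The package name varies by platform and repository,
# e.g. 'docker.io' on Debian/Ubuntu or 'docker-ce' from Docker's own repository.
package { 'docker':
  ensure => installed,
}

service { 'docker':
  ensure  => running,
  enable  => true,                # start on boot
  require => Package['docker'],   # install the package before managing the service
}
```

5. Implementing Asynchronous Processing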

To optimize Excel processing further, use a message queue (like RabbitMQ or Kafka) to handle uploads asynchronously. This ensures that the main API remains responsive while heavy processing tasks are managed in the background.

- Workers: Dedicated worker processes (for example, a separate set of consumer pods) pull tasks from the queue and process them independently, so no single task blocks the main application.

- Batch Processing: For large datasets, process in batches to optimize resource usage.

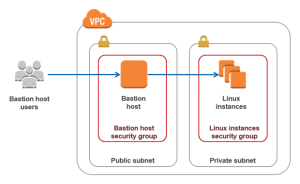
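
The queue-and-worker pattern described above can be sketched with Python's standard library. This is a minimal, single-process illustration under stated assumptions: `queue.Queue` and threads stand in for a real broker such as RabbitMQ and for separate worker pods, and the function names, row format, and `BATCH_SIZE` value are hypothetical.

```python
import queue
import threading
from typing import Dict, Iterator, List, Optional, Tuple

BATCH_SIZE = 500  # hypothetical; tune to memory and database limits

Job = Optional[Tuple[str, List[dict]]]  # (upload_id, rows), or None as a stop signal


def batched(rows: List[dict], size: int) -> Iterator[List[dict]]:
    """Yield consecutive slices of at most `size` rows."""
    for start in range(0, len(rows), size):
        yield rows[start:start + size]


def worker(jobs: "queue.Queue[Job]", results: Dict[str, int], lock: threading.Lock) -> None:
    """Pull upload jobs until the stop signal arrives; process each job in batches."""
    while True:
        job = jobs.get()
        if job is None:
            break
        upload_id, rows = job
        processed = 0
        for batch in batched(rows, BATCH_SIZE):
            # Placeholder for real work, e.g. validating rows and bulk-inserting the batch.
            processed += len(batch)
        with lock:
            results[upload_id] = processed


def process_uploads(uploads: Dict[str, List[dict]], num_workers: int = 4) -> Dict[str, int]:
    """Enqueue every upload, let a pool of workers drain the queue, and collect row counts."""
    jobs: "queue.Queue[Job]" = queue.Queue()
    results: Dict[str, int] = {}
    lock = threading.Lock()
    threads = [threading.Thread(target=worker, args=(jobs, results, lock)) for _ in range(num_workers)]
    for thread in threads:
        thread.start()
    for upload_id, rows in uploads.items():
        jobs.put((upload_id, rows))  # an API handler would stop here and return immediately
    for _ in threads:
        jobs.put(None)  # one stop signal per worker, queued after all real jobs
    for thread in threads:
        thread.join()
    return results
```

In production, the API handler would only enqueue the job and return an upload ID right away, and the workers would run in their own Deployment so they can scale independently of the API.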

6. Ensuring Security and Data Isolation

Security and data privacy are critical in an EMI collection SaaS:

- Data Isolation: Each client’s data should be isolated to prevent unauthorized access.

- Encryption: Encrypt sensitive data both at rest and in transit to protect it from breaches.

- Authentication and Authorization: Use standard protocols and token formats, such as OAuth 2.0 or OpenID Connect with JWT access tokens, and enforce fine-grained authorization controls.
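
To make the token and data-isolation checks concrete, here is a minimal sketch of verifying an HS256-signed JWT and refusing requests for another client's data. It uses only the standard library for illustration; in production you would rely on a vetted library (such as PyJWT) and typically on keys issued by your identity provider. The `tenant_id` claim and all function names are hypothetical.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url_encode(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _sign(signing_input: str, key: bytes) -> bytes:
    return hmac.new(key, signing_input.encode("ascii"), hashlib.sha256).digest()


def issue_token(claims: dict, key: bytes) -> str:
    """Build a compact HS256 JWT: base64url(header).base64url(claims).base64url(signature)."""
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        _b64url_encode(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    )
    return f"{signing_input}.{_b64url_encode(_sign(signing_input, key))}"


def verify_token(token: str, key: bytes, now: Optional[float] = None) -> dict:
    """Return the claims if signature, algorithm, and expiry are valid; else raise ValueError."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("malformed token")
    header_b64, payload_b64, sig_b64 = parts
    # Check the signature before trusting anything inside the token.
    if not hmac.compare_digest(_sign(f"{header_b64}.{payload_b64}", key), _b64url_decode(sig_b64)):
        raise ValueError("bad signature")
    if json.loads(_b64url_decode(header_b64)).get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    claims = json.loads(_b64url_decode(payload_b64))
    if claims.get("exp", 0) <= (time.time() if now is None else now):
        raise ValueError("token expired or missing exp")
    return claims


def can_access_tenant(token: str, key: bytes, tenant_id: str) -> bool:
    """Data isolation: a caller may only touch data belonging to its own tenant."""
    try:
        claims = verify_token(token, key)
    except ValueError:
        return False
    return claims.get("tenant_id") == tenant_id
```

Every failure path (bad format, wrong key, tampered payload, expired token) ends in a refusal, which is the behavior you want for a multi-client financial system.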

7. Continuous Integration and Continuous Deployment (CI/CD)

Implement a robust CI/CD pipeline to automate the building, testing, and deployment of microservices. Tools like Jenkins, GitLab CI, and CircleCI can help ensure that any code changes are rigorously tested before being deployed to production.

8. Monitoring and Optimization

- Centralized Logging: Use centralized logging solutions like ELK Stack or Grafana Loki to gather logs from all microservices for analysis and debugging.

- Monitoring Tools: Use Prometheus and Grafana to monitor application health, resource usage, and performance metrics.

- Alerts and Notifications: Set up alerts for critical events, such as service outages or high error rates, to enable prompt responses.

9. Optimizing Database Management

A crucial aspect of any SaaS platform, especially for financial applications like EMI collection, is efficient database management. Handling large volumes of client data requires a strategy that maximizes performance while ensuring data integrity and security.

Strategies for Database Optimization:

- Separate Databases for Clients: Each client should ideally have its own database instance to ensure data isolation. This approach also allows for more granular control over database scaling, backups, and security policies.

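- Database Sharding: For clients with large datasets, implement sharding to split the data across multiple databases. Each shard holds a smaller slice of the data and serves only part of the traffic, which spreads the load and keeps per-shard queries fast; the trade-off is that queries spanning several shards become more complex.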

- Read Replicas: Use read replicas to handle read-heavy operations without overloading the primary database. This setup is particularly useful for reporting and analytics services that read data frequently and can tolerate slight replication lag.

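- Connection Pooling: Implement connection pooling to reuse a bounded set of open database connections instead of opening a new one for every request. This avoids repeated connection setup and caps the load on the database, reducing latency and resource consumption under heavy traffic.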
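
The first two strategies can be combined in a small routing layer that chooses a database for every query. In this sketch, each client has its own database, and a large client's data is split across several shards by loan ID. The client names, connection strings, and the choice of loan ID as the shard key are assumptions for illustration.

```python
import hashlib
from typing import Dict, List

# Hypothetical layout: every client (tenant) has its own database; a large
# client's data is split across several shard databases.
TENANT_DATABASES: Dict[str, List[str]] = {
    "nbfc-a": ["postgresql://nbfc-a-db.internal/emi"],
    "nbfc-b": [
        "postgresql://nbfc-b-shard-0.internal/emi",
        "postgresql://nbfc-b-shard-1.internal/emi",
        "postgresql://nbfc-b-shard-2.internal/emi",
    ],
}


def shard_index(shard_key: str, num_shards: int) -> int:
    """Stable shard number for a key. Python's built-in hash() is randomized per
    process for strings, so a SHA-256 digest is used to get the same answer on every pod."""
    digest = hashlib.sha256(shard_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def database_for(tenant_id: str, loan_id: str) -> str:
    """Return the connection string holding this loan's data.

    Raises KeyError for an unknown tenant, so a lookup never falls back
    to another client's database.
    """
    shards = TENANT_DATABASES[tenant_id]
    if len(shards) == 1:
        return shards[0]
    return shards[shard_index(loan_id, len(shards))]
```

Because the shard is chosen as `hash mod N`, adding a shard later moves most loans to a different database; teams that expect to reshard often use consistent hashing or an explicit lookup table instead.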

10. Enhancing Security and Compliance

Security is paramount in EMI collection systems because of the sensitivity of financial data. Robust security measures and compliance with applicable regulations and standards, such as GDPR and PCI DSS, are critical.

Key Security Measures:

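- Secret Management: Use tools like Kubernetes Secrets or HashiCorp Vault to manage sensitive information such as API keys, database credentials, and other confidential data. Note that Kubernetes Secrets are only base64-encoded by default, so enable encryption at rest for etcd and restrict access to Secrets with RBAC.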

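- Network Policies: Define Kubernetes network policies to restrict traffic between services so that only necessary communication paths are open. This reduces the attack surface and limits how far an attacker can move after compromising one service. Policies are enforced only if the cluster's network plugin supports them.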

- TLS Encryption: Enforce TLS encryption for all data in transit to protect it from interception. For data at rest, use strong encryption algorithms provided by your database or storage solutions.

- Role-Based Access Control (RBAC): Implement RBAC to restrict access to resources based on user roles, ensuring that only authorized users can access sensitive data or perform critical operations.

- Regular Security Audits: Conduct regular security audits and penetration testing to identify and mitigate vulnerabilities proactively.
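
A deny-by-default RBAC check can be as small as a mapping from roles to permissions. The roles and permissions below are hypothetical examples for an EMI collection tenant, not a prescribed model; in a real service, the caller's roles would come from its verified identity, while Kubernetes RBAC separately governs access to cluster resources.

```python
from enum import Enum
from typing import Dict, FrozenSet, Iterable


class Permission(Enum):
    VIEW_COLLECTIONS = "view_collections"
    RECORD_PAYMENT = "record_payment"
    UPLOAD_EXCEL = "upload_excel"
    MANAGE_USERS = "manage_users"


# Hypothetical roles for one EMI collection tenant.
ROLE_PERMISSIONS: Dict[str, FrozenSet[Permission]] = {
    "collection_agent": frozenset({Permission.VIEW_COLLECTIONS, Permission.RECORD_PAYMENT}),
    "branch_manager": frozenset({
        Permission.VIEW_COLLECTIONS, Permission.RECORD_PAYMENT, Permission.UPLOAD_EXCEL,
    }),
    "tenant_admin": frozenset(Permission),  # every permission
}


def is_allowed(roles: Iterable[str], permission: Permission) -> bool:
    """Deny by default: unknown roles, or no roles at all, grant nothing."""
    return any(permission in ROLE_PERMISSIONS.get(role, frozenset()) for role in roles)
```

Keeping the mapping in one place makes it easy to review during the security audits mentioned above.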

11. Monitoring and Observability

To maintain the health and performance of your EMI collection SaaS, you need comprehensive monitoring and observability practices. These practices help you detect issues early, understand their root causes, and respond quickly.

Tools and Practices for Monitoring:

- Prometheus and Grafana: Use Prometheus for collecting and querying metrics, and Grafana for visualizing those metrics. These tools can monitor various system aspects, such as CPU usage, memory consumption, request latency, and error rates.

- Distributed Tracing: Implement distributed tracing (e.g., with Jaeger or OpenTelemetry) to track requests across microservices. This helps in pinpointing performance bottlenecks and understanding how different services interact.

- Log Aggregation: Centralize logs using tools like the ELK Stack (Elasticsearch, Logstash, Kibana) or Loki. Centralized logging allows for efficient searching, analysis, and correlation of log data across services.

- Alerting: Set up alerting based on key metrics or log events to notify you of potential issues before they impact users. For example, you could alert on high error rates, unusual latency spikes, or resource exhaustion.
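
In practice, these alerts are usually defined as Prometheus alerting rules, but the logic behind an error-rate alert is easy to illustrate. The sketch below keeps a sliding time window of requests and fires when the error rate exceeds a threshold. The window, threshold, and minimum request count are hypothetical defaults, and timestamps are passed in explicitly so the behavior is deterministic.

```python
from collections import deque
from typing import Deque, Tuple


class ErrorRateAlert:
    """Fire when the share of failed requests in a sliding time window exceeds a threshold."""

    def __init__(self, window_seconds: float = 300.0, threshold: float = 0.05,
                 min_requests: int = 20) -> None:
        self.window_seconds = window_seconds
        self.threshold = threshold
        self.min_requests = min_requests  # avoid paging anyone over a handful of requests
        self._events: Deque[Tuple[float, bool]] = deque()
        self._errors = 0

    def record(self, timestamp: float, is_error: bool) -> None:
        self._events.append((timestamp, is_error))
        self._errors += int(is_error)
        self._evict(timestamp)

    def _evict(self, now: float) -> None:
        # Drop requests that have fallen out of the window.
        while self._events and self._events[0][0] <= now - self.window_seconds:
            _, was_error = self._events.popleft()
            self._errors -= int(was_error)

    def should_fire(self, now: float) -> bool:
        self._evict(now)
        total = len(self._events)
        return total > 0 and total >= self.min_requests and self._errors / total > self.threshold
```

The minimum request count matters for low-traffic services: one failure out of two requests is a 50% error rate, but rarely worth an alert.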

12. Robust Testing Strategy

Testing is a critical component in a microservices architecture due to the complexity of service interactions and dependencies. A robust testing strategy ensures that your system remains reliable and performs well as it scales.

Types of Testing to Implement:

- Unit Testing: Ensure each microservice functions correctly in isolation. This helps catch bugs early in the development cycle.

- Integration Testing: Test the interactions between services to ensure they work together as expected. This is crucial for identifying issues that may not be apparent when testing services in isolation.

- End-to-End Testing: Simulate real-world scenarios to test the complete application workflow. This helps validate that the application meets user requirements and performs well under typical usage conditions.

- Performance Testing: Assess how well your system handles various loads to ensure it meets performance requirements. Tools like Apache JMeter or Gatling can be used to simulate high-traffic conditions and identify potential bottlenecks.

- Security Testing: Conduct security tests to identify vulnerabilities in your system. This includes both static analysis (checking code for vulnerabilities) and dynamic analysis (testing the running application).
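
As an example of unit testing a single service in isolation, the sketch below tests a hypothetical row parser that the Excel Processing Service might use. The function name, column names, and validation rules are assumptions; the point is that each rule gets its own small, fast test. Run it with `python -m unittest <module>`.

```python
import unittest
from decimal import Decimal, InvalidOperation
from typing import Tuple


def parse_payment_row(row: dict) -> Tuple[str, Decimal]:
    """Hypothetical parser for one uploaded Excel row; returns (loan_id, amount)."""
    raw_loan_id = row.get("loan_id")
    loan_id = "" if raw_loan_id is None else str(raw_loan_id).strip()
    if not loan_id:
        raise ValueError("missing loan_id")
    try:
        amount = Decimal(str(row["amount"]))
    except (KeyError, InvalidOperation):
        raise ValueError("missing or non-numeric amount") from None
    if not amount.is_finite() or amount <= 0:
        raise ValueError("amount must be a positive number")
    return loan_id, amount


class ParsePaymentRowTest(unittest.TestCase):
    def test_valid_row_is_trimmed_and_parsed(self):
        self.assertEqual(parse_payment_row({"loan_id": " L-100 ", "amount": "2500.50"}),
                         ("L-100", Decimal("2500.50")))

    def test_missing_loan_id_is_rejected(self):
        with self.assertRaises(ValueError):
            parse_payment_row({"loan_id": None, "amount": 100})

    def test_non_positive_amount_is_rejected(self):
        with self.assertRaises(ValueError):
            parse_payment_row({"loan_id": "L-1", "amount": 0})

    def test_non_numeric_amount_is_rejected(self):
        with self.assertRaises(ValueError):
            parse_payment_row({"loan_id": "L-1", "amount": "abc"})
```

Using `Decimal` rather than `float` for amounts avoids binary rounding errors, which matters when totals must reconcile with bank statements.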

13. Continuous Improvement and Iteration

Once the initial architecture and deployment are in place, the work does not stop. Continuous improvement is key to maintaining a high-performance, scalable, and secure system.

Strategies for Continuous Improvement:

- Feedback Loops: Establish feedback loops with users and stakeholders to gather input on system performance, usability, and features. Use this feedback to prioritize improvements and new features.

- Regular Retrospectives: Conduct retrospectives with your development and operations teams to review what’s working well and where there are opportunities for improvement. This helps ensure that the team continually learns and evolves.

- Automated Audits and Health Checks: Implement automated audits and health checks to continuously monitor the system’s compliance with security standards, performance benchmarks, and operational best practices.

- Adopting New Technologies: Stay updated on emerging technologies and best practices in cloud computing, container orchestration, and microservices. Be ready to adopt new tools or methodologies that can enhance your application’s performance, security, or scalability.
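
Health checks are also what a Kubernetes readiness probe can call. Below is a minimal standard-library sketch of a `/healthz` endpoint that aggregates dependency checks and returns HTTP 503 if any of them fails. The path, port, and placeholder checks are hypothetical.

```python
import json
from http.server import BaseHTTPRequestHandler
from typing import Callable, Dict, Tuple

# Hypothetical dependency checks; real ones would ping the database, the broker, etc.
CHECKS: Dict[str, Callable[[], bool]] = {
    "database": lambda: True,
    "message_broker": lambda: True,
}


def run_checks(checks: Dict[str, Callable[[], bool]]) -> Tuple[int, Dict[str, str]]:
    """Run every check; any failing or crashing check turns the response into a 503."""
    results: Dict[str, str] = {}
    for name, check in checks.items():
        try:
            results[name] = "ok" if check() else "failing"
        except Exception:
            results[name] = "error"
    status = 200 if all(value == "ok" for value in results.values()) else 503
    return status, results


class HealthHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        if self.path != "/healthz":
            self.send_error(404)
            return
        status, results = run_checks(CHECKS)
        body = json.dumps(results).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


# To serve on port 8080:
# http.server.HTTPServer(("0.0.0.0", 8080), HealthHandler).serve_forever()
```

Returning per-check results in the body, not just a status code, makes it much faster to see which dependency caused a pod to be marked unready.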

14. Asynchronous Processing and Event-Driven Architecture

To optimize performance and scalability further, consider adopting an event-driven architecture. This approach is particularly useful for handling asynchronous tasks that do not require immediate user feedback, such as processing large Excel files or generating receipts.

Benefits of an Event-Driven Architecture:

- Decoupling Services: In an event-driven model, services communicate through events rather than direct calls. This decoupling reduces dependencies and allows services to evolve independently without impacting the overall system.

- Scalability: Events can be processed by multiple consumers simultaneously, allowing you to scale processing based on demand. For instance, if there’s a spike in Excel file uploads, additional instances of the processing service can be spun up to handle the load.

- Improved Responsiveness: By processing tasks asynchronously, the system can respond to user actions faster, as tasks that do not need immediate feedback are queued for later processing.

Implementing an Event-Driven Architecture:

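- Event Brokers: Use message brokers like Apache Kafka, RabbitMQ, or AWS SQS to manage event queues. These tools deliver messages reliably, but their ordering guarantees differ: Kafka preserves order only within a partition, and SQS only in FIFO queues, so design consumers to tolerate reordering and duplicate deliveries where necessary.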

- Asynchronous Services: Develop services that can handle events asynchronously. For example, an Excel processing service could listen for file upload events and begin processing them independently of other services.

- Event Sourcing: For critical data changes, consider using event sourcing, where state changes are stored as a sequence of events. This approach not only provides a full audit trail but also allows you to reconstruct the state of any service by replaying events.
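
Event sourcing can be illustrated with a small in-memory example: the current amount due on a loan is never stored directly, only derived by replaying its events. The event types and fields are hypothetical, and the in-memory list stands in for a durable store such as a Kafka topic or an append-only database table.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Iterable, List, Union


@dataclass(frozen=True)
class EmiScheduled:
    loan_id: str
    amount: Decimal


@dataclass(frozen=True)
class PaymentReceived:
    loan_id: str
    amount: Decimal
    receipt_no: str


Event = Union[EmiScheduled, PaymentReceived]


class EventStore:
    """Append-only log: events are recorded, never updated or deleted."""

    def __init__(self) -> None:
        self._events: List[Event] = []

    def append(self, event: Event) -> None:
        self._events.append(event)

    def events_for(self, loan_id: str) -> List[Event]:
        return [event for event in self._events if event.loan_id == loan_id]


def amount_due(events: Iterable[Event]) -> Decimal:
    """Derive the current amount due by replaying events in the order they occurred."""
    due = Decimal("0")
    for event in events:
        if isinstance(event, EmiScheduled):
            due += event.amount
        elif isinstance(event, PaymentReceived):
            due -= event.amount
    return due
```

Because every payment remains in the log with its receipt number, the same events that produce the balance also serve as the audit trail.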

15. Implementing Advanced Load Balancing and Service Discovery

Effective load balancing and service discovery are essential for managing traffic efficiently in a microservices architecture.

Load Balancing Strategies:

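- Round Robin: Sends requests to each instance in turn. This is a simple yet effective method that works well when instances have similar capacity and requests take similar amounts of time.

- Least Connections: Directs traffic to the instance with the fewest active connections. This approach helps when request durations vary widely (for example, a mix of quick API calls and long-running uploads), because a plain rotation would keep sending work to instances that are still busy.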

- Geographic Load Balancing: Routes traffic based on geographic location to reduce latency. For global applications, this ensures users are connected to the nearest data center or cloud region.
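
In practice, load balancers and service-mesh proxies make this choice for you, but the difference between the first two strategies is easy to see in code. This is an illustrative sketch with hypothetical instance names, not how any particular load balancer is implemented.

```python
import itertools
import threading
from typing import List


class RoundRobinBalancer:
    """Hand out instances in a fixed rotation."""

    def __init__(self, instances: List[str]) -> None:
        self._rotation = itertools.cycle(instances)
        self._lock = threading.Lock()

    def pick(self) -> str:
        with self._lock:
            return next(self._rotation)


class LeastConnectionsBalancer:
    """Send each request to the instance with the fewest requests in flight."""

    def __init__(self, instances: List[str]) -> None:
        self._in_flight = {instance: 0 for instance in instances}
        self._lock = threading.Lock()

    def acquire(self) -> str:
        with self._lock:
            # On a tie, min() returns the earliest instance in the list.
            instance = min(self._in_flight, key=self._in_flight.__getitem__)
            self._in_flight[instance] += 1
            return instance

    def release(self, instance: str) -> None:
        """Call when a request finishes so the instance's count goes back down."""
        with self._lock:
            self._in_flight[instance] -= 1
```

Round robin needs no feedback from the instances, while least connections depends on every request calling `release`; that extra bookkeeping is what lets it steer traffic away from instances stuck on long uploads.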

Service Discovery:

- Service Registry: Use a service registry (e.g., Consul, Eureka) to keep track of all service instances and their locations, so that services can discover each other without hard-coded IP addresses or endpoints. Inside Kubernetes, the built-in Service objects and cluster DNS already provide this for in-cluster traffic.

- Sidecar Pattern: Deploy a sidecar container alongside each microservice instance to handle service discovery and load balancing. The sidecar proxies the service's traffic, looking up healthy instances in the registry and routing requests to them.
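
The registry idea can be sketched as a heartbeat table with a time-to-live: instances register, refresh their entry periodically, and drop out of lookups when they stop. This is a hypothetical in-memory illustration; Consul and Eureka add health checks, replication, and client libraries on top of the same concept. The clock is injectable so the example is testable.

```python
import time
from typing import Callable, Dict, List


class ServiceRegistry:
    """In-memory registry: instances register, refresh their entry with heartbeats,
    and drop out of lookups once their last heartbeat is older than `ttl` seconds."""

    def __init__(self, ttl: float = 30.0, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl
        self._clock = clock
        self._last_seen: Dict[str, Dict[str, float]] = {}  # service -> {address: last heartbeat}

    def register(self, service: str, address: str) -> None:
        self._last_seen.setdefault(service, {})[address] = self._clock()

    def heartbeat(self, service: str, address: str) -> None:
        self.register(service, address)  # a heartbeat simply refreshes the timestamp

    def deregister(self, service: str, address: str) -> None:
        self._last_seen.get(service, {}).pop(address, None)

    def lookup(self, service: str) -> List[str]:
        """Addresses of instances whose heartbeat is still within the TTL."""
        now = self._clock()
        instances = self._last_seen.get(service, {})
        return sorted(addr for addr, seen in instances.items() if now - seen <= self.ttl)
```

The TTL means a crashed instance disappears from lookups on its own, even if it never gets the chance to deregister.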

16. Advanced Configuration Management with Puppet

While Puppet provides a robust platform for managing infrastructure as code, leveraging its full capabilities can enhance your application’s reliability and security.

Advanced Puppet Techniques:

- Hiera for Configuration Data Management: Use Hiera, Puppet’s built-in key-value configuration data lookup tool, to manage configurations more dynamically. This allows for a separation of code and configuration, enabling different environments (development, staging, production) to use the same Puppet manifests with different settings.

- Puppet Bolt for Orchestrating Tasks: For tasks that must run across many systems at once, use Puppet Bolt. Bolt is agentless (it connects over SSH or WinRM) and lets you run ad-hoc commands, scripts, tasks, and plans, such as restarting services or applying patches, across your infrastructure without writing a Puppet manifest or installing a Puppet agent.

- Automated Compliance and Security Audits: Use Puppet to enforce compliance with security policies and best practices. By defining these requirements in Puppet code, you can automatically detect and remediate any configuration drift or security issues.
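
Hiera's default lookup walks a hierarchy from most to least specific and returns the first value it finds, which is what lets one manifest produce different settings per environment or node. The Python sketch below only mimics that first-found behavior to show the idea; real Hiera data lives in YAML files configured by `hiera.yaml` and also supports merge strategies. The keys and values are hypothetical.

```python
from typing import Any, Dict, Mapping, Sequence

_MISSING = object()


def first_found(key: str, layers: Sequence[Mapping[str, Any]], default: Any = _MISSING) -> Any:
    """Return the value from the first (most specific) layer that defines `key`."""
    for layer in layers:
        if key in layer:
            return layer[key]
    if default is _MISSING:
        raise KeyError(key)
    return default


# Hypothetical data, ordered like a hierarchy of node -> environment -> common.
COMMON = {"excel_workers": 2, "log_level": "info", "db_pool_size": 10}
ENVIRONMENTS = {
    "staging": {"log_level": "debug"},
    "production": {"excel_workers": 8, "db_pool_size": 50},
}
NODES = {"worker-03.prod": {"excel_workers": 12}}


def settings_for(node: str, environment: str) -> Dict[str, Any]:
    layers = [NODES.get(node, {}), ENVIRONMENTS.get(environment, {}), COMMON]
    return {key: first_found(key, layers) for key in COMMON}
```

Only the values that differ are written at the more specific levels; everything else falls through to the common defaults.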

17. Building a Robust CI/CD Pipeline

Implementing a robust Continuous Integration/Continuous Deployment (CI/CD) pipeline is critical for automating the development and deployment process, ensuring that new features and bug fixes are delivered quickly and reliably.

Key Components of a CI/CD Pipeline:

- Automated Builds: Trigger builds automatically whenever code is pushed to the repository. This ensures that every code change is integrated and tested early, reducing the risk of integration issues later.

- Automated Testing: Run unit, integration, and end-to-end tests as part of the build process. Automating tests helps catch bugs early and ensures that changes do not break existing functionality.

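- Continuous Delivery and Deployment: Automatically deploy changes that pass tests to the staging environment, which allows immediate feedback from stakeholders and faster iteration. Strictly speaking, this is continuous delivery; continuous deployment goes one step further and releases to production automatically.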

- Canary Releases: Use canary releases to deploy changes to a small subset of users first. This approach helps detect potential issues in a controlled environment before a full-scale release.

- Rollback Mechanisms: Implement rollback mechanisms to revert to the previous stable version if a deployment fails. This ensures that any issues do not impact users for an extended period.
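
A canary release needs two decisions: which users see the new version, and when to roll back. The sketch below assigns users to the canary with a stable hash, so each user consistently gets the same version, and compares the canary's error rate with the stable version's. The salt, thresholds, and minimum traffic are hypothetical; in practice, a service mesh or a progressive-delivery tool usually handles the routing.

```python
import hashlib


def canary_bucket(user_id: str, salt: str) -> float:
    """Map a user to a stable value in [0, 100) with 0.01% resolution."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 10_000 / 100


def routes_to_canary(user_id: str, canary_percent: float, salt: str = "release-candidate") -> bool:
    """True if this user should be served by the canary version.
    Changing the salt for each release reshuffles which users land in the canary."""
    return canary_bucket(user_id, salt) < canary_percent


def should_roll_back(canary_errors: int, canary_requests: int,
                     baseline_errors: int, baseline_requests: int,
                     max_ratio: float = 1.5, min_error_rate: float = 0.01,
                     min_requests: int = 200) -> bool:
    """Roll back when the canary's error rate is clearly worse than the stable version's."""
    if canary_requests < min_requests:
        return False  # too little traffic to judge yet
    canary_rate = canary_errors / canary_requests
    baseline_rate = baseline_errors / max(baseline_requests, 1)
    return canary_rate > min_error_rate and canary_rate > baseline_rate * max_ratio
```

Comparing against the stable version's live error rate, rather than a fixed number, keeps an unrelated incident from being blamed on the canary.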

18. Optimizing for Cost Efficiency

While optimizing for performance and scalability is crucial, managing costs is equally important in a SaaS environment. Balancing performance with cost efficiency ensures you maximize value without overspending.

Strategies for Cost Optimization:

- Right-Sizing Resources: Regularly monitor resource usage and adjust resource allocations accordingly. This may involve reducing the number of replicas, resizing containers, or using spot instances for non-critical workloads.

- Autoscaling Policies: Use Kubernetes autoscaling policies to scale resources up and down based on demand. This ensures you are only using resources when necessary, reducing costs during low-usage periods.

- Reserved Instances: For long-term workloads, consider using reserved instances or savings plans to reduce compute costs. Cloud providers offer significant discounts for committing to using specific resources over time.

- Optimized Storage Solutions: Choose storage solutions that match your use case. For example, use SSD storage for high-performance needs and object storage for large amounts of infrequently accessed data.
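
Right-sizing usually starts from observed usage. This sketch suggests a CPU request from the 95th percentile of usage samples (in millicores) plus 20% headroom, rounded up to a multiple of 50m. The percentile, headroom, and rounding step are hypothetical policy choices, not Kubernetes defaults.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile, pct in (0, 100]."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def recommend_cpu_request(usage_millicores: Sequence[float], pct: float = 95,
                          headroom: float = 1.2, step: int = 50) -> int:
    """Suggested CPU request in millicores: pct-th percentile of observed usage,
    plus headroom, rounded up to a multiple of `step`."""
    target = percentile(usage_millicores, pct) * headroom
    return math.ceil(target / step) * step
```

For a service that idles around 100m and occasionally bursts to 1000m, this suggests a request of 150m; the rare bursts are left to the container's CPU limit and to autoscaling instead of being reserved around the clock.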

19. Implementing a Service Mesh for Microservices Communication

A service mesh can enhance the management of microservices communication by providing advanced networking features, such as traffic management, security, and observability, without requiring changes to application code.

Benefits of a Service Mesh:

- Traffic Management: Control the flow of traffic between services, allowing for fine-grained traffic shaping, A/B testing, and blue-green deployments.

- Security: Enforce security policies, such as mutual TLS for service-to-service communication, and manage service identities centrally.

- Observability: Gain deep insights into microservice communication with distributed tracing, metrics, and logging. This helps in quickly diagnosing issues and understanding service dependencies.

Implementing a Service Mesh:

- Choose a Service Mesh: Popular options include Istio, Linkerd, and Consul. Each has its strengths, so choose one that aligns with your specific needs and ecosystem.

- Deploy the Service Mesh: Deploy the service mesh alongside your existing microservices in Kubernetes. Most service meshes use a sidecar proxy pattern, deploying a proxy alongside each microservice instance.

- Configure Policies: Define and enforce policies for traffic routing, security, and observability within the service mesh. This might include setting up circuit breakers, retries, and rate limits.
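
With a service mesh, circuit breaking is configured in the proxies rather than written in application code (in Istio, for example, through the connection-pool and outlier-detection settings of a DestinationRule). The Python sketch below only illustrates the state machine such a policy implements; the thresholds are hypothetical and the clock is injectable for testing.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised instead of calling a downstream service that is considered unhealthy."""


class CircuitBreaker:
    """Closed -> open after `failure_threshold` consecutive failures. After
    `reset_timeout` seconds, trial calls are allowed (half-open): a success
    closes the circuit, a failure opens it again."""

    def __init__(self, failure_threshold: int = 5, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self._opened_at is None:
            return "closed"
        if self._clock() - self._opened_at >= self.reset_timeout:
            return "half-open"
        return "open"

    def call(self, fn: Callable[[], T]) -> T:
        if self.state == "open":
            raise CircuitOpenError("failing fast; downstream marked unhealthy")
        try:
            result = fn()
        except Exception:
            self._failures += 1
            if self.state == "half-open" or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()  # (re)open the circuit
            raise
        self._failures = 0
        self._opened_at = None  # a success closes the circuit
        return result
```

Failing fast while the circuit is open keeps callers from piling up requests on a struggling dependency, which gives it time to recover.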

20. Scaling Horizontally and Vertically

Scaling strategies should not be limited to one dimension. Both horizontal and vertical scaling have roles in optimizing the performance and capacity of your application.

Horizontal Scaling:

- Increase Instances: Add more instances (pods in Kubernetes) of a microservice to handle additional load. This is ideal for stateless services where each instance operates independently.

- Sharding: Distribute load by sharding data across multiple databases or storage backends, allowing horizontal scaling of data handling.
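
For adding instances automatically, Kubernetes' Horizontal Pod Autoscaler computes the desired replica count as `ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skips scaling when that ratio is within a tolerance (0.1 by default) of 1.0, and keeps the result within the configured minimum and maximum. The function below reproduces only that core formula as a sketch; the real controller also accounts for unready pods, missing metrics, and a scale-down stabilization window.

```python
import math


def hpa_desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                         min_replicas: int = 1, max_replicas: int = 10,
                         tolerance: float = 0.1) -> int:
    """Core HPA calculation: scale replicas in proportion to metric / target,
    ignore small deviations, and clamp to [min_replicas, max_replicas]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: no scaling action
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, 3 replicas averaging 90% CPU against a 60% target gives `ceil(3 * 1.5)`, or 5 replicas, while 63% against the same target falls inside the tolerance and leaves the Deployment at 3.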

Vertical Scaling:

- Resource Scaling: Increase the resources (CPU, memory) allocated to a service or database instance to improve performance for resource-intensive operations.

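- Use Vertical Pod Autoscaler (VPA): In Kubernetes, the Vertical Pod Autoscaler adjusts a container's CPU and memory requests (and, proportionally, its limits) based on observed usage. Avoid letting VPA and the Horizontal Pod Autoscaler both act on the same CPU or memory metric for one workload, since they will work against each other.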

Conclusion

Implementing the strategies outlined above can significantly improve the scalability, resilience, and efficiency of your EMI collection SaaS. Moving from a monolithic architecture to microservices, with Docker for containerization, Kubernetes for orchestration, and Puppet for configuration management, lets each part of the system scale and fail independently. An event-driven architecture, advanced configuration management, and deliberate scaling strategies bring you closer to a modern, cloud-native system that meets user needs and business objectives, while careful database management, security measures, and compliance protect the sensitive financial data that NBFCs and their clients depend on. The result is a platform that can deliver a seamless, reliable experience for your clients while remaining cost-effective.

None of this is a one-time effort. Continuous monitoring, testing, and improvement keep the platform robust and secure as workloads grow, and prepare it for the evolving needs of NBFCs and the wider financial technology space.

Tags: SaaS, Kubernetes, Puppet, Microservices, EMI Collection, Docker, DevOps, Cloud Architecture, Scalability, Infrastructure as Code