Database sharding is a method of horizontally partitioning a database across multiple servers or nodes. In MongoDB, sharding divides a collection's data into ranges of shard key values called “chunks” and distributes them across multiple servers or replica sets, each of which is called a “shard”.

When data is sharded in MongoDB, each shard contains only a subset of the data. The data is divided based on a shard key, which is a field (or combination of fields) present in the documents of the collection; it does not have to be unique. MongoDB uses the shard key value to determine which shard a particular document should be stored on. With ranged sharding, documents with nearby shard key values tend to be stored on the same shard, which can improve performance for queries that filter on the shard key.
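To make the idea of range-based placement concrete, here is a minimal sketch in Python. It is a simplified model, not MongoDB's actual implementation: the chunk boundaries, the shard names, and the `customer_id` shard key are all hypothetical values chosen for illustration.

```python
from bisect import bisect_right

# Hypothetical chunk boundaries on a numeric shard key (assumed: customer_id).
# Chunk i covers [CHUNK_LOWER_BOUNDS[i], CHUNK_LOWER_BOUNDS[i + 1]);
# the first bound stands in for "lowest possible key".
CHUNK_LOWER_BOUNDS = [float("-inf"), 1000, 2000, 3000]

# Hypothetical owner of each chunk; one shard can own several chunks.
CHUNK_OWNERS = ["shardA", "shardB", "shardA", "shardC"]


def shard_for(document, shard_key="customer_id"):
    """Return the name of the shard whose chunk range contains the document's key."""
    value = document[shard_key]
    # Find the last chunk whose lower bound is <= value.
    index = bisect_right(CHUNK_LOWER_BOUNDS, value) - 1
    return CHUNK_OWNERS[index]
```

In this model, documents with `customer_id` values 1000 and 1999 fall in the same chunk and so land on the same shard, which is the locality that ranged sharding provides.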

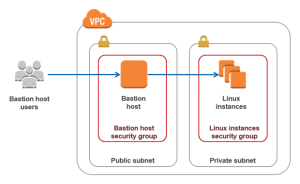

In MongoDB, sharding is achieved through the use of a cluster of three different types of nodes: config servers, shards, and mongos routers. Config servers store metadata about the sharded cluster, such as which shards contain which data. Shards hold the actual data, and mongos routers act as gateways between the application and the shards.
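The division of labor between these components can be sketched roughly as follows. This is a toy model in Python under stated assumptions: the `CHUNKS` table stands in for metadata a router would obtain from the config servers, and the shard names, key ranges, and `customer_id` shard key are hypothetical. It only handles equality filters.

```python
# Hypothetical routing metadata, as a router might cache it from the config servers:
# each entry is (inclusive lower bound, exclusive upper bound, shard name).
CHUNKS = [
    (0, 1000, "shardA"),
    (1000, 2000, "shardB"),
    (2000, 3000, "shardC"),
]


def target_shards(query, shard_key="customer_id"):
    """Return the sorted shard names a router would contact for an equality query."""
    if shard_key in query:
        value = query[shard_key]
        # The filter includes the shard key: only the shard owning that range is needed.
        return sorted({shard for lo, hi, shard in CHUNKS if lo <= value < hi})
    # The filter omits the shard key: any shard may hold matching documents.
    return sorted({shard for _, _, shard in CHUNKS})
```

A query such as `{"customer_id": 1500}` is sent to a single shard in this sketch, while `{"status": "open"}` has to be sent to all of them. This is why queries that include the shard key tend to scale better.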

To enable sharding in MongoDB, the following steps are generally required:

- Set up a MongoDB cluster with a config server replica set (three members are typical in production), one or more shards, and one or more mongos routers.

- Choose a shard key for the data you want to shard. A good shard key spreads data and load evenly across the shards while keeping data that is queried together on the same shard.

- Enable sharding for the database using the “sh.enableSharding()” command, then shard the collection using the “sh.shardCollection()” command.

- Specify the shard key when sharding the collection, then insert data into the collection. MongoDB will automatically distribute the data across the appropriate shards based on the shard key.

- Query the data through a mongos router, which routes each query to the appropriate shards and merges the results.
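The enable-and-shard steps above can be sketched from Python as well. The snippet below builds the `enableSharding` and `shardCollection` admin commands as plain dictionaries and passes them to a client's `admin.command()` method, as PyMongo provides. The helper names `sharding_commands` and `apply_commands`, and the `shop.orders` namespace and `customer_id` key in the usage note, are hypothetical and chosen only for illustration.

```python
def sharding_commands(database, collection, shard_key, hashed=False):
    """Build the admin commands that enable sharding and shard one collection."""
    namespace = f"{database}.{collection}"
    # 1 requests ranged sharding on the field; "hashed" requests hashed sharding.
    key_spec = {shard_key: "hashed" if hashed else 1}
    return [
        {"enableSharding": database},
        {"shardCollection": namespace, "key": key_spec},
    ]


def apply_commands(client, commands):
    """Run each command against the admin database of a client connected to a mongos."""
    return [client.admin.command(command) for command in commands]
```

Assuming PyMongo is installed and a mongos router is reachable, one might call `apply_commands(MongoClient(uri), sharding_commands("shop", "orders", "customer_id", hashed=True))`, where `uri` points at the router rather than at an individual shard. After that, ordinary inserts and queries issued through the same client are distributed by the cluster.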

Sharding can help improve the scalability and performance of a MongoDB database by allowing data to be distributed across multiple servers. Sharding by itself does not replicate data; high availability and fault tolerance come from deploying each shard as a replica set. Sharding also introduces additional complexity and management overhead, so it should be carefully considered and implemented only when necessary.

Sharding is a technique used to distribute large amounts of data across multiple machines in order to support high-throughput operations. When dealing with large data sets or high query rates, a single server may not be able to handle the load. This can lead to problems such as CPU exhaustion or I/O bottlenecks. To address this, there are two approaches to system growth: vertical and horizontal scaling.

Vertical scaling involves increasing the capacity of a single server by adding more powerful hardware components such as CPUs, RAM, or storage space. However, there may be practical limits to how much a single machine can be scaled. For example, cloud-based providers have limitations based on available hardware configurations.

Horizontal scaling involves dividing the system’s data set and workload across multiple servers, with each machine handling a subset of the overall workload. While each individual machine may not be as powerful as a high-end server, the overall efficiency can be improved due to the distribution of the workload. Additionally, expanding the capacity of the deployment only requires adding more servers as needed, which can be more cost-effective than investing in high-end hardware for a single machine. However, horizontal scaling also introduces increased complexity in infrastructure and maintenance.